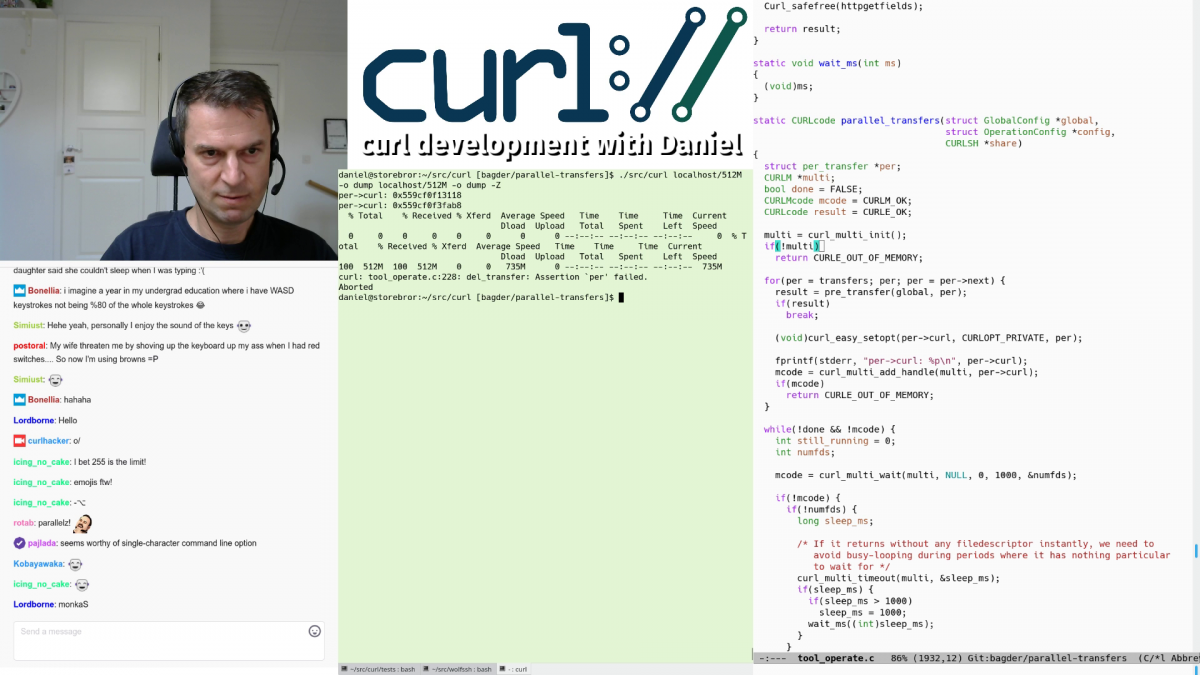

As some of you already found out, I’ve tried live-streaming curl development recently. If you want to catch previous and upcoming episodes subscribe on my twitch page.

Why stream

For the fun of it. I work alone from home most of the time and this is a way for me to interact with others.

To show what’s going on in curl right now. By streaming some of my development I also show what kind of work that’s being done, showing that a lot of development and work are being put into curl and I can share my thoughts and plans with a wider community. Perhaps this will help getting more people to help out or to tickle their imagination.

For the feedback and interaction. It is immediately notable that one of the biggest reasons I enjoy live-streaming is the chat with the audience and the instant feedback on mistakes I do or thoughts and plans I express. It becomes a back-and-forth and it is not at all just a one-way broadcast. The more my audience interact with me, the more fun I have! That’s also the reason I show the chat within the stream most of the time since parts of what I say and do are reactions and follow-ups to what happens there.

I can only hope I get even more feedback and comments as I get better at this and that people find out about what I’m doing here.

And really, by now I also think of it as a really concentrated and devoted hacking time. I can get a lot of things done during these streaming sessions! I’ll try to keep them going a while.

Twitch

I decided to go with twitch simply because it is an established and known live-streaming platform. I didn’t do any deeper analyses or comparisons, but it seems to work fine for my purposes. I get a stream out with video and sound and people seem to be able to enjoy it.

As of this writing, there are 1645 people following me on twitch. Typical recent live-streams of mine have been watched by over a hundred simultaneous viewers. I also archive all past streams on Youtube, so you can get almost the same experience my watching back issues there.

I announce my upcoming streaming sessions as “events” on Twitch, and I announce them on twitter (@bagder you know). I try to stick to streaming on European day time hours basically because then I’m all alone at home and risk fewer interruptions or distractions from family members or similar.

Challenges

It’s not as easy as it may look trying to write code or debug an issue while at the same time explaining what I do. I learnt that the sessions get better if I have real and meaty issues to deal with or features to add, rather than to just have a few light-weight things to polish.

I also quickly learned that it is better to now not show an actual screen of mine in the stream, but instead I show a crafted set of windows placed on the output to look like it is a screen. This way there’s a much smaller risk that I actually show off private stuff or other content that wasn’t meant for the audience to see. It also makes it easier to show a tidy, consistent and clear “desktop”.

Streaming makes me have to stay focused on the development and prevents me from drifting off and watching cats or reading amusing tweets for a while

Trolls

So far we’ve been spared from the worst kind of behavior and people. We’ve only had some mild weirdos showing up in the chat and nothing that we couldn’t handle.

Equipment and software

I do all development on Linux so things have to work fine on Linux. Luckily, OBS Studio is a fine streaming app. With this, I can setup different “scenes” and I can change between them easily. Some of the scenes I have created are “emacs + term”, “browser” and “coffee break”.

When I want to show off me fiddling with the issues on github, I switch to the “browser” scene that primarily shows a big browser window (and the chat and the webcam in smaller windows).

When I want to show code, I switch to “emacs + term” that instead shows a terminal and an emacs window (and again the chat and the webcam in smaller windows), and so on.

OBS has built-in support for some of the major streaming services, including twitch, so it’s just a matter of pasting in a key in an input field, press ‘start streaming’ and go!

The rest of the software is the stuff I normally use anyway for developing. I don’t fake anything and I don’t make anything up. I use emacs, make, terminals, gdb etc. Everything this runs on my primary desktop Debian Linux machine that has 32GB of ram, an older i7-3770K CPU at 3.50GHz with a dual screen setup. The video of me is captured with a basic Logitech C270 webcam and the sound of my voice and the keyboard is picked up with my Sennheiser PC8 headset.

Some viewers have asked me about my keyboard which you can hear. It is a FUNC-460 that is now approaching 5 years, and I know for a fact that I press nearly 7 million keys per year.

Coffee

In a reddit post about my live-streaming, user ‘digitalsin’ suggested “Maybe don’t slurp RIGHT INTO THE FUCKING MIC”.

How else am I supposed to have my coffee while developing?