curl 7.60.0 is released. Remember 7.59.0? This latest release cycle was a week longer than normal since the last was one week shorter and we had this particular release date adapted to my traveling last week. It gave us 63 days to cram things in, instead of the regular 56 days.

7.60.0 is a crazy version number in many ways. We’ve been working on the version 7 series since virtually forever (the year 2000) and there’s no version 8 in sight any time soon. This is the 174th curl release ever.

I believe we shouldn’t allow the minor number to go above 99 (because I think it will cause serious confusion among users) so we should come up with a scheme to switch to version 8 before 7.99.0 gets old. If we keep doing a new minor version every eight weeks, which seems like the fastest route, math tells us that’s a mere 6 years away.
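For the curious, the arithmetic behind that estimate can be spelled out in a few lines of Python. This is only a back-of-the-envelope sketch: it assumes every future release bumps the minor number by exactly one and lands on the regular eight-week cycle, and the function name is made up for illustration.

```python
# Back-of-the-envelope check of the "6 years" estimate.
CURRENT_MINOR = 60      # this release is 7.60.0
LAST_MINOR = 99         # the proposed ceiling, 7.99.0
WEEKS_PER_RELEASE = 8   # the regular 56-day cycle


def years_until_last_minor(current=CURRENT_MINOR, last=LAST_MINOR,
                           weeks=WEEKS_PER_RELEASE):
    """Return the years needed to go from minor `current` to minor `last`."""
    releases_left = last - current          # 39 releases to go
    return releases_left * weeks / 52       # 312 weeks, expressed in years


print(years_until_last_minor())  # prints 6.0
```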

Numbers

In the 63 days since the previous release, we have done and had…

3 changes

111 bug fixes (total: 4,450)

166 commits (total: 23,119)

2 new curl_easy_setopt() options (total: 255)

1 new curl command line option (total: 214)

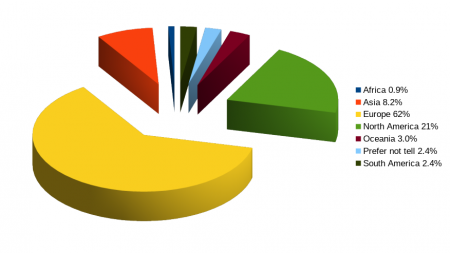

64 contributors, 36 new (total: 1,741)

42 authors (total: 577)

2 security fixes (total: 80)

What good does 7.60.0 bring?

Our tireless and fierce army of security researchers keeps hammering away at every angle of our code and this has again unveiled vulnerabilities in previously released curl code:

1. FTP shutdown response buffer overflow: CVE-2018-1000300

When you tell libcurl to use a larger buffer size, that larger buffer size is not used for the shutdown of an FTP connection, so if the server then sends back a huge response during that sequence, it would overflow a heap-based buffer.

2. RTSP bad headers buffer over-read: CVE-2018-1000301

The header parser function would sometimes not restore a pointer back to the beginning of the buffer, which could lead to a subsequent function reading out of buffer and causing a crash or potential information leak.

There are also two new features introduced in this version:

HAProxy protocol support

HAProxy has pioneered this simple protocol for clients to pass on meta-data to the server about where the connection comes from; it is designed to allow systems to chain proxies / reverse-proxies without losing information about the originating client. Now you can make your libcurl-using application switch this on with CURLOPT_HAPROXYPROTOCOL and from the command line with curl’s new --haproxy-protocol option.
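To give a feel for how simple the protocol is: in version 1 of the PROXY protocol, the meta-data is a single human-readable line sent before anything else on the connection. Here is a minimal Python sketch that builds such a line following the published v1 text format. It is not libcurl’s code; the function name and the example addresses are made up for illustration.

```python
import ipaddress


def proxy_v1_header(src_ip, dst_ip, src_port, dst_port):
    """Build a PROXY protocol v1 line (hypothetical helper, for illustration)."""
    src = ipaddress.ip_address(src_ip)
    dst = ipaddress.ip_address(dst_ip)
    if src.version != dst.version:
        raise ValueError("source and destination must use the same address family")
    family = "TCP4" if src.version == 4 else "TCP6"
    # Format: PROXY <family> <src addr> <dst addr> <src port> <dst port> CRLF
    line = f"PROXY {family} {src} {dst} {src_port} {dst_port}\r\n"
    return line.encode("ascii")


# Documentation-range example addresses, not real hosts.
print(proxy_v1_header("192.0.2.10", "198.51.100.5", 51234, 443))
```

The receiving server reads this line first and then treats the rest of the stream as the regular protocol, which is why both ends have to agree on using it.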

Shuffling DNS addresses

Over six years ago, I blogged on how round robin DNS doesn’t really work these days. Once upon a time the gethostbyname() family of functions actually returned addresses in a sort of random fashion, which made clients use them in an almost random fashion and therefore they were spread out on the different addresses. When getaddrinfo() took over as the name resolving function, it also introduced address sorting and prioritizing, in a way that effectively breaks the round robin approach.

Now, you can get this feature back with libcurl. Set CURLOPT_DNS_SHUFFLE_ADDRESSES to have the list of addresses shuffled after they are resolved, before they’re used. If you’re connecting to a service that offers several IP addresses and you want to connect to one of those addresses in a semi-random fashion, this option is for you.
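The idea of shuffling a resolved address list can be sketched in a few lines of Python. This is an illustration of the concept using a plain Fisher-Yates shuffle, not a copy of what libcurl does internally; the function name and the sample addresses are assumptions.

```python
import random


def shuffle_addresses(addresses, rng=random):
    """Return a shuffled copy of a resolved address list (Fisher-Yates)."""
    shuffled = list(addresses)              # leave the caller's list untouched
    for i in range(len(shuffled) - 1, 0, -1):
        j = rng.randint(0, i)               # pick a slot in 0..i inclusive
        shuffled[i], shuffled[j] = shuffled[j], shuffled[i]
    return shuffled


# Pretend these came back from the resolver, sorted the getaddrinfo() way.
resolved = ["192.0.2.1", "192.0.2.2", "192.0.2.3", "192.0.2.4"]
print(shuffle_addresses(resolved))          # order varies from run to run
```

A client that always connects to the first entry of the shuffled list ends up spreading its connections over all the addresses, which is the effect round robin DNS was after.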

There’s no command line option to switch this on. Yet.

Bug fixes

We did many bug fixes for this release as usual, but some of my favorite ones this time around are…

improved pending transfers for HTTP/2

When a libcurl-using application adds more transfers than can be sent over the wire immediately (usually because the application has set some limit on the parallelism libcurl will do), libcurl can hold the extra transfers “pending”. They’re basically kept in a separate queue until there’s a chance to send them off. libcurl then attempts to start them when the streams that are in progress end.

The algorithm for retrying the pending transfers was quite naive and “brute-force”, which made it terribly slow and ineffective when many transfers were waiting in the pending queue. This slowed down the transfers unnecessarily.

With the fixes we’ve landed in 7.60.0, the algorithm is less stupid, which leads to much less overhead and, for this setup, much faster transfers.

curl_multi_timeout values with threaded resolver

When using a libcurl version that is built to use a threaded resolver, there’s no socket to wait for during the name resolving phase so we’ve often recommended users to just wait “a short while” during this interval. That has always been a weakness and an unfortunate situation.

Starting now, curl_multi_timeout() will return suitable timeout values during this period so that users will no longer have to re-implement that logic themselves. The timeouts will be slowly increasing to make sure fast resolves are detected quickly but slow resolves don’t consume too much CPU.
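A slowly increasing timeout of this kind could look roughly like the Python sketch below. The start value, the doubling and the cap are assumptions picked for illustration and are not taken from libcurl’s source.

```python
import itertools


def resolver_poll_timeouts(start_ms=1, cap_ms=250):
    """Yield poll timeouts that double each round until they reach a cap.

    The numbers are hypothetical; they only illustrate the trade-off.
    """
    timeout = start_ms
    while True:
        yield timeout
        timeout = min(timeout * 2, cap_ms)


print(list(itertools.islice(resolver_poll_timeouts(), 10)))
# prints [1, 2, 4, 8, 16, 32, 64, 128, 250, 250]
```

Short waits at the beginning catch a fast resolve almost immediately, while the cap keeps a slow resolve from turning into a busy loop.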

much faster cookies

The cookie code in libcurl was keeping them all in a linear linked list. That’s fine for small amounts of cookies or perhaps if you don’t manipulate them much.

Users with several hundred cookies, or even thousands, will notice a speed increase in 7.60.0 that in some situations is on the order of several magnitudes, now that the internal representation has changed to use hash tables and some good cleanups were made.
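Why a hash table helps can be shown with a small Python sketch that compares the two storage strategies. It is a conceptual illustration only: the class names are invented, and keying the table on the cookie domain is an assumption made for the example.

```python
from collections import defaultdict


class CookieList:
    """Linear storage: every lookup walks through all stored cookies."""

    def __init__(self):
        self.cookies = []

    def add(self, domain, name, value):
        self.cookies.append((domain, name, value))

    def for_domain(self, domain):
        return [(n, v) for d, n, v in self.cookies if d == domain]


class CookieTable:
    """Hashed storage: a lookup only touches the bucket for that domain."""

    def __init__(self):
        self.buckets = defaultdict(list)

    def add(self, domain, name, value):
        self.buckets[domain].append((name, value))

    def for_domain(self, domain):
        return list(self.buckets.get(domain, []))


linear, hashed = CookieList(), CookieTable()
for i in range(5000):
    for store in (linear, hashed):
        store.add(f"site{i}.example", "session", str(i))

# Same answer from both, but the list scans 5000 entries to find it.
print(linear.for_domain("site4999.example") == hashed.for_domain("site4999.example"))
```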

HTTP/2 GOAWAY-handling

We figured out some problems in libcurl’s handling of GOAWAY, like when an application wants to do a bunch of transfers over a connection that suddenly gets a GOAWAY so that libcurl needs to create a new connection to do the rest of the pending transfers over.

Turns out nginx ships with a config option named http2_max_requests that sets the maximum number of requests it allows over the same connection before it sends GOAWAY over it (and it defaults to 1000). This option isn’t very well explained in their docs and it seems users won’t really know what good values to set it to, so this is probably the primary reason clients see GOAWAYs where there’s no apparent good reason for them.

Setting the value to a ridiculously low value at least helped me debug this problem and improve how libcurl deals with it!

Repair non-ASCII support

We’ve supported transfers with libcurl on non-ASCII platforms since early 2007. Non-ASCII here basically means EBCDIC, but the code hasn’t been limited to those.

However, because only a small number of users rely on this and our test infrastructure doesn’t test the feature well enough, we slipped recently and broke libcurl for the non-ASCII users. Work was put in and changes were landed to make sure that libcurl works again on these systems!

Enjoy 7.60.0! In 56 days there should be another release to play with…