Welcome to take the next step with us in this never-ending stroll.

Release presentation

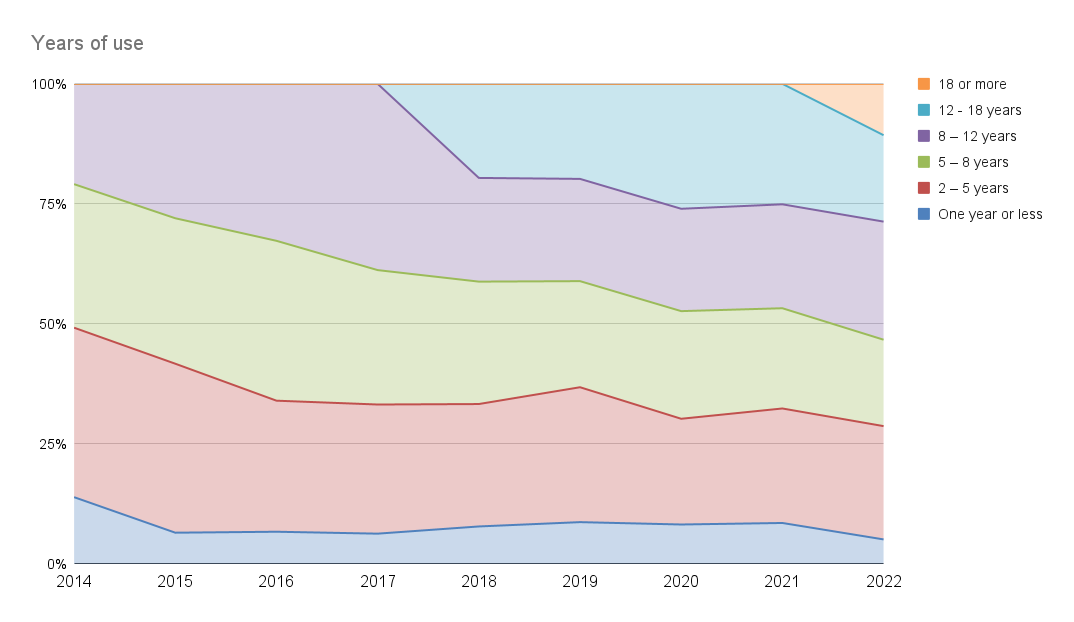

Numbers

the 209th release

8 changes

47 days (total: 8,865)

123 bug-fixes (total: 7,980)

214 commits (total: 28,787)

0 new public libcurl function (total: 88)

2 new curl_easy_setopt() option (total: 297)

1 new curl command line option (total: 248)

51 contributors, 20 new (total: 2,652)

35 authors, 13 new (total: 1,043)

4 security fixes (total: 125)

Bug Bounties total: 34,660 USD

Security

This is another release in which scrutinizing eyes have been poking around and found questionable code paths that could be lead to insecurities. We announce four new security advisories this time – all found and reported by Harry Sintonen. This bumps mr Sintonen’s curl CVE counter up to 17; the number of security problems in curl found and reported by him alone.

CVE-2022-32205: Set-Cookie denial of service

A malicious server can serve excessive amounts of Set-Cookie: headers in a HTTP response to curl and curl stores all of them. A sufficiently large amount of (big) cookies make subsequent HTTP requests to this, or other servers to which the cookies match, create requests that become larger than the threshold that curl uses internally to avoid sending crazy large requests (1048576 bytes) and instead returns an error.

CVE-2022-32206: HTTP compression denial of service

curl supports “chained” HTTP compression algorithms, meaning that a server response can be compressed multiple times and potentially with different algorithms. The number of acceptable “links” in this “decompression chain” was unbounded, allowing a malicious server to insert a virtually unlimited number of compression steps.

CVE-2022-32207: Unpreserved file permissions

When curl saves cookies, alt-svc and hsts data to local files, it makes the operation atomic by finalizing the operation with a rename from a temporary name to the final target file name.

In that rename operation, it might accidentally widen the permissions for the target file, leaving the updated file accessible to more users than intended.

CVE-2022-32208: FTP-KRB bad message verification

When curl does FTP transfers secured by krb5, it handles message verification failures wrongly. This flaw makes it possible for a Man-In-The-Middle attack to go unnoticed and even allows it to inject data to the client.

Changes

We have no less than eight different changes logged this time. Two are command line changes and the rest are library side.

--rate

This new command line option rate limits the number of transfers per time period.

deprecate --random-file and --egd-file

These are two options that have not been used by anyone for an extended period of time, and starting now they have no functionality left. Using them has no effect.

curl_global_init() is threadsafe

Finally, and this should be conditioned to say that the function is only thread-safe on most platforms.

curl_version_info: adds CURL_VERSION_THREADSAFE

The point here is that you can check if global init is thread-safe in your particular libcurl build.

CURLINFO_CAPATH/CAINFO: get default CA paths

As the default values for these values are typically figured out and set at build time, applications might appreciate being able to figure out what they are set to by default.

CURLOPT_SSH_HOSTKEYFUNCTION

For libssh2 enabled builds, you can now set a callback for hostkey verification.

deprecate RANDOM_FILE and EGDSOCKET

The libcurl version of the change mentioned above for the command line. The CURLOPT_RANDOM_FILE and CURLOPT_EGDSOCKET options no longer do anything. They most probably have not been used by any application for a long time.

unix sockets to socks proxy

You can now tell (lib)curl to connect to a SOCKS proxy using unix domain sockets instead of traditional TCP.

Bugfixes

We merged way over a hundred bugfixes in this release. Below are descriptions of some of the fixes I think are particularly interesting to highlight and know about.

improved cmake support for libpsl and libidn2

more powers to the cmake build

address cookie secure domain overlay

Addressed issues when identically named cookies marked secure are loaded over HTTPS and then again over HTTP and vice versa. Cookies are complicated.

make repository REUSE compliant

Being REUSE compliant makes we now have even better order and control of the copyright and licenses used in the project.

headers API no longer EXPERIMENTAL

The header API is now officially a full member of the family.

reject overly many HTTP/2 push-promise headers

curl would accept an unlimited number of headers in a HTTP/2 push promise request, which would eventually lead to out of memory – starting now it will instead reject and cancel such ridiculous streams earlier.

restore HTTP header folding behavior

curl broke the previous HTTP header behavior in the 7.83.1 release, and it has now been restored again. As a bonus, the headers API supports folded headers as well. Folding headers being the ones that are the rare (and deprecated) continuation headers that start with a whitespace.

skip fake-close when libssh does the right thing

Previously, libssh would, a little over-ambitiously, close our socket for us but that has been fixed and curl is adjusted accordingly.

check %USERPROFILE% for .netrc on Windows

A few other tools apparently look for and use .netrc if found in the %USERPROFILE% directory, so by making curl also check there, we get better cross tool .netrc behavior.

support quoted strings in .netrc

curl now supports quoted strings in .netrc files so that you can provide spaces and more in an easier way.

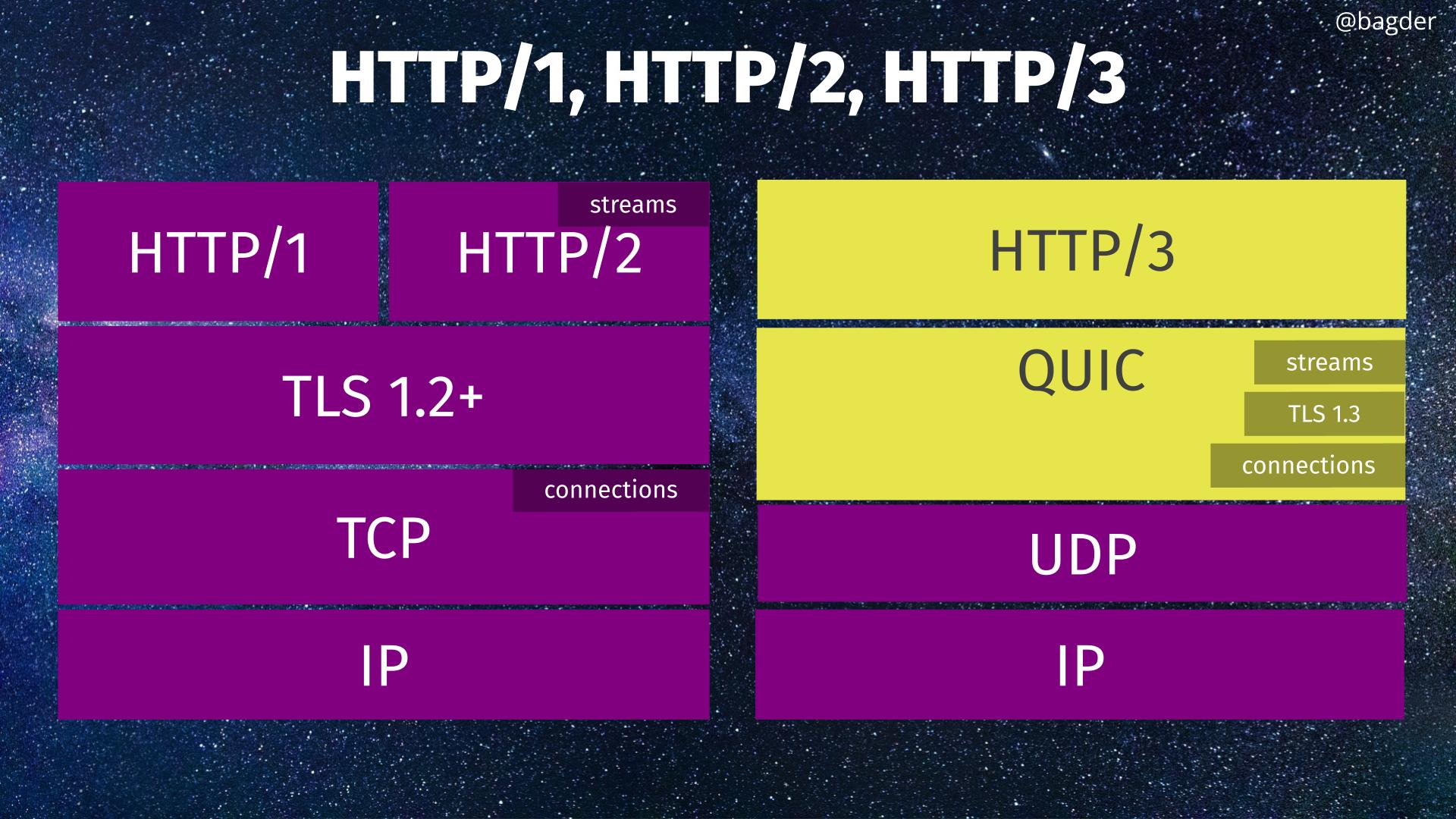

many changes in ngtcp2

There were lots of big and small changes in the HTTP/3 backend powered by ngtcp2.

provide a fixed fake host name in NTLM

curl no longer tries to provide the actual local host name when doing NTLM authentication to reduce information leakage. Instead, curl now uses the same fixed fake host name that Firefox uses when speaking NTLM: WORKSTATION.

return error from “lethal” poll/select errors

A persistent error in select() or poll() could previously be ignored by libcurl and not result in an error code returned to the user, making it loop more than necessary.

strcase optimizations

The case insensitive string comparisons were optimized.

maintain path-as-is after redirects

After a redirect or if doing multi-stage authentication, the --path-as-is status would be dropped.

support CURLU_URLENCODE for curl_url_get

This is useful when for example you ask the API to accept spaces in URLs and you want to later extract a valid URL with such an embedded space URL encoded

Coming next

7.85.0 is scheduled to ship on August 31, 2022.