curl, or libcurl specifically, is probably the world’s most popular and widely used HTTP client side library counting more than six billion installs.

curl is a rock solid and feature-packed library that supports a huge amount of protocols and capabilities that surpass most competitors. But this comes at a cost: it is not the smallest library you can find.

Within a 100K

Instead of being happy with getting told that curl is “too big” for certain use cases, I set a goal for myself: make it possible to build a version of curl that can do HTTPS and fit in 100K (including the wolfSSL TLS library) on a typical 32 bit architecture.

As a comparison, the tiny-curl shared library when built on an x86-64 Linux, is smaller than 25% of the size as the default Debian shipped library is.

FreeRTOS

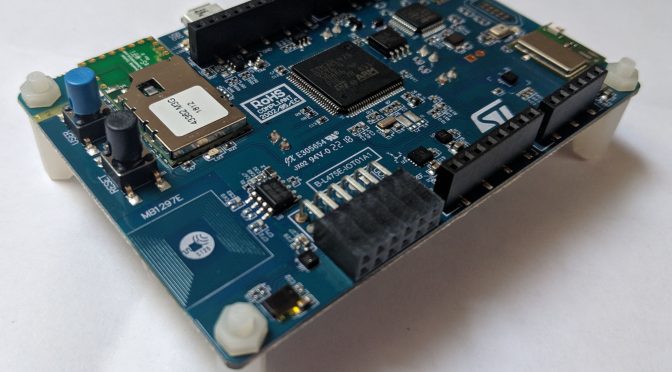

But let’s not stop there. Users with this kind of strict size requirements are rarely running a full Linux installation or similar OS. If you are sensitive about storage to the exact kilobyte level, you usually run a more slimmed down OS as well – so I decided that my initial tiny-curl effort should be done on FreeRTOS. That’s a fairly popular and free RTOS for the more resource constrained devices.

This port is still rough and I expect us to release follow-up releases soon that improves the FreeRTOS port and ideally also adds support for other popular RTOSes. Which RTOS would you like to support for that isn’t already supported?

Offer the libcurl API for HTTPS on FreeRTOS, within 100 kilobytes.

Maintain API

I strongly believe that the power of having libcurl in your embedded devices is partly powered by the libcurl API. The API that you can use for libcurl on any platform, that’s been around for a very long time and for which you can find numerous examples for on the Internet and in libcurl’s extensive documentation. Maintaining support for the API was of the highest priority.

Patch it

My secondary goal was to patch as clean as possible so that we can upstream patches into the main curl source tree for the changes makes sense and that aren’t disturbing to the general code base, and for the work that we can’t we should be able to rebase on top of the curl code base with as little obstruction as possible going forward.

Keep the HTTPS basics

I just want to do HTTPS GET

That’s the mantra here. My patch disables a lot of protocols and features:

- No protocols except HTTP(S) are supported

- HTTP/1 only

- No cookie support

- No date parsing

- No alt-svc

- No HTTP authentication

- No DNS-over-HTTPS

- No .netrc parsing

- No HTTP multi-part formposts

- No shuffled DNS support

- No built-in progress meter

Although they’re all disabled individually so it is still easy to enable one or more of these for specific builds.

Downloads and versions?

Tiny-curl 0.9 is the first shot at this and can be downloaded from wolfSSL. It is based on curl 7.64.1.

Most of the patches in tiny-curl are being upstreamed into curl in the #3844 pull request. I intend to upstream most, if not all, of the tiny-curl work over time.

License

The FreeRTOS port of tiny-curl is licensed GPLv3 and not MIT like the rest of curl. This is an experiment to see how we can do curl work like this in a sustainable way. If you want this under another license, we’re open for business over at wolfSSL!