Mar 31 2020, 11:13:38: I get a message from Frank in the #curl IRC channel over on Freenode. I’m always “hanging out” on IRC and Frank is a long time friend and fellow frequent IRCer in that channel. This time, Frank informs me that the curl web site is acting up:

“I’m getting 403s for some mailing list archive pages. They go away when I reload”

That’s weird and unexpected. An important detail here is that the curl web site is “CDNed” by Fastly. This means that every visitor of the web site is actually going to one of Fastly’s servers and in most cases they get cached content from those servers, and only infrequently do these servers come back to my “origin” server and ask for an updated file to send out to a web site visitor.

A 403 error for a valid page is not a good thing. I started checking out some of my logs – which then only are for the origin as I don’t do any logging at all at CDN level (more about that later) – and I could verify the 403 errors. So they’re in my log meaning it isn’t caused by (a misconfiguration of) the CDN. Why would a perfectly legitimate URL suddenly return 403 to have it go away again after a reload?

Why does he get a 403?

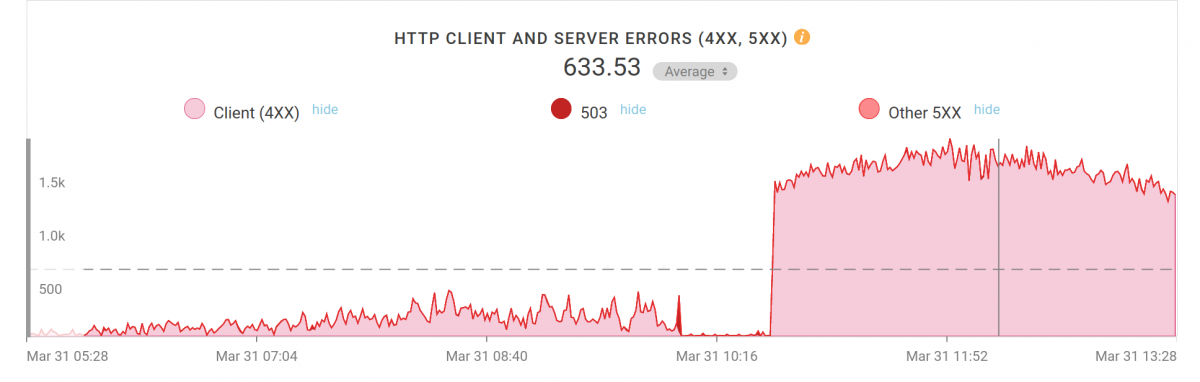

I took a look at Fastly’s management web interface and I spotted that the curl web site was sending out data at an unusual high speed at the moment. An average speed of around 50mbps, while we typically average at below 20. Hm… something is going on.

While I continued to look for the answers to these things I noted that my logs were growing really rapidly. There were POSTs being sent to the same single URL at a high frequency (10-20 reqs/second) and each of those would get some 225Kbytes of data returned. And they all used the same User-agent: QQGameHall. It seems this started within the last 24 hours or so. They’re POSTs so Fastly basically always pass them through to my server.

Before I could figure out Franks’s 403s, I decided to slow down this madness by temporarily forbidding this user-agent access so that the bot or program or whatever would notice it starts to fail, and it would of course then stop bombarding the site.

Deny

Ok, a quick deny of the user-agent made my server start responding with 403s to all those requests and instead of a 225K response it now sent back 465 bytes per request. The average bandwidth on the site immediately dropped down to below 20Mbps again. Back to looking for Frank’s 403-problem

The answer was pretty simple and I didn’t have to search a lot. The clues existed in the error logs and it turned out we had “mod_evasive” enabled since another heavy bot load “attack” a while back. It is a module for “rate limiting” incoming requests and since a lot of requests to our server now comes from Fastly’s limited set of IP addresses and we had this crazy QQ thing hitting us, my server would return a 403 every now and then when it considered the rate too high.

I whitelisted Fastly’s requests and Frank’s 403 problems were solved.

Deny a level up

The bot traffic showed no sign of slowing down. Easily 20 requests per second, to the same URL and they all get an error back and obviously they don’t care. I decided to up my game a little so with help, I moved my blocking of this service to Fastly. I now block their user-agent already there so the traffic doesn’t ever reach my server. Phew, my server was finally back to its regular calm state. They way it should be.

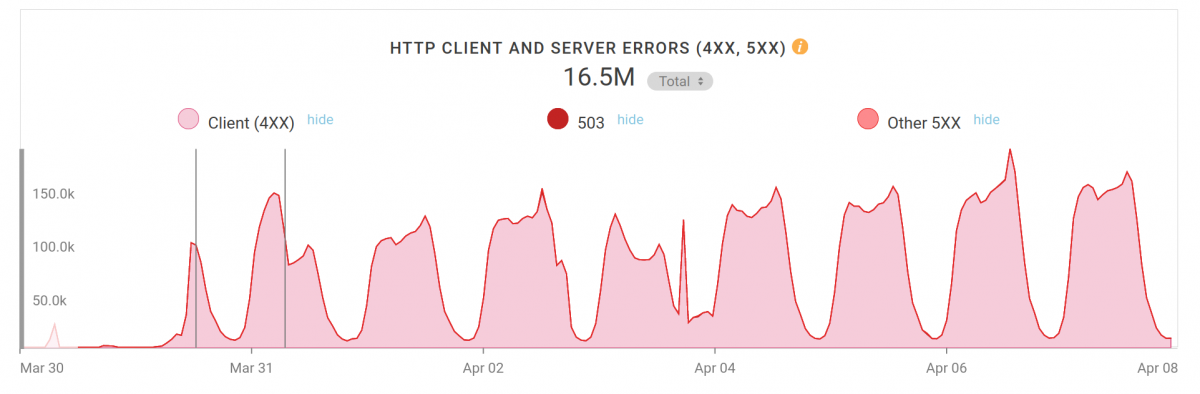

It doesn’t stop there. Here’s a follow-up graph I just grabbed, a little over a week since I started the blocking. 16.5 million blocked requests (and counting). This graph here shows number of requests/hour on the Y axis, peeking at almost 190k; around 50 requests/second. The load is of course not actually a problem, just a nuisance now. QQGameHall keeps on going.

QQGameHall

What we know about this.

Friends on Twitter and googling for this name informs us that this is a “game launcher” done by Tencent. I’ve tried to contact them via Twitter (as I have no means of contacting them otherwise that seems even remotely likely to work).

I have not checked what these user-agent POSTs, because I didn’t log that. I suspect it was just a zero byte POST.

The URL they post to is the CA cert bundle file with provide on the curl CA extract web page. The one we convert from the Mozilla version into a PEM for users of the world to enjoy. (Someone seems to enjoy this maybe just a little too much.)

The user-agents seemed to come (mostly) from China which seems to add up. Also, the look of the graph when it goes up and down could indicate an eastern time zone.

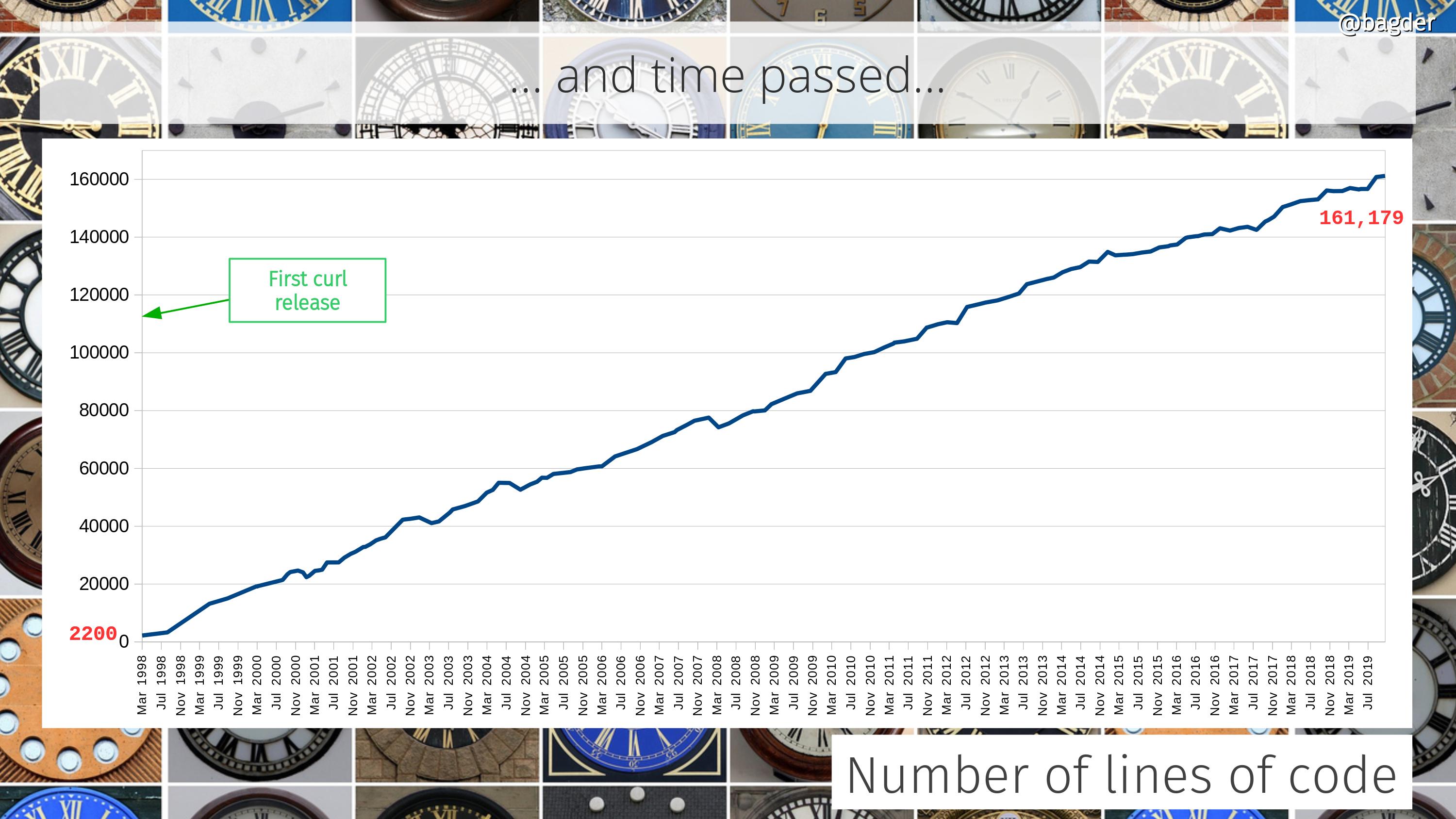

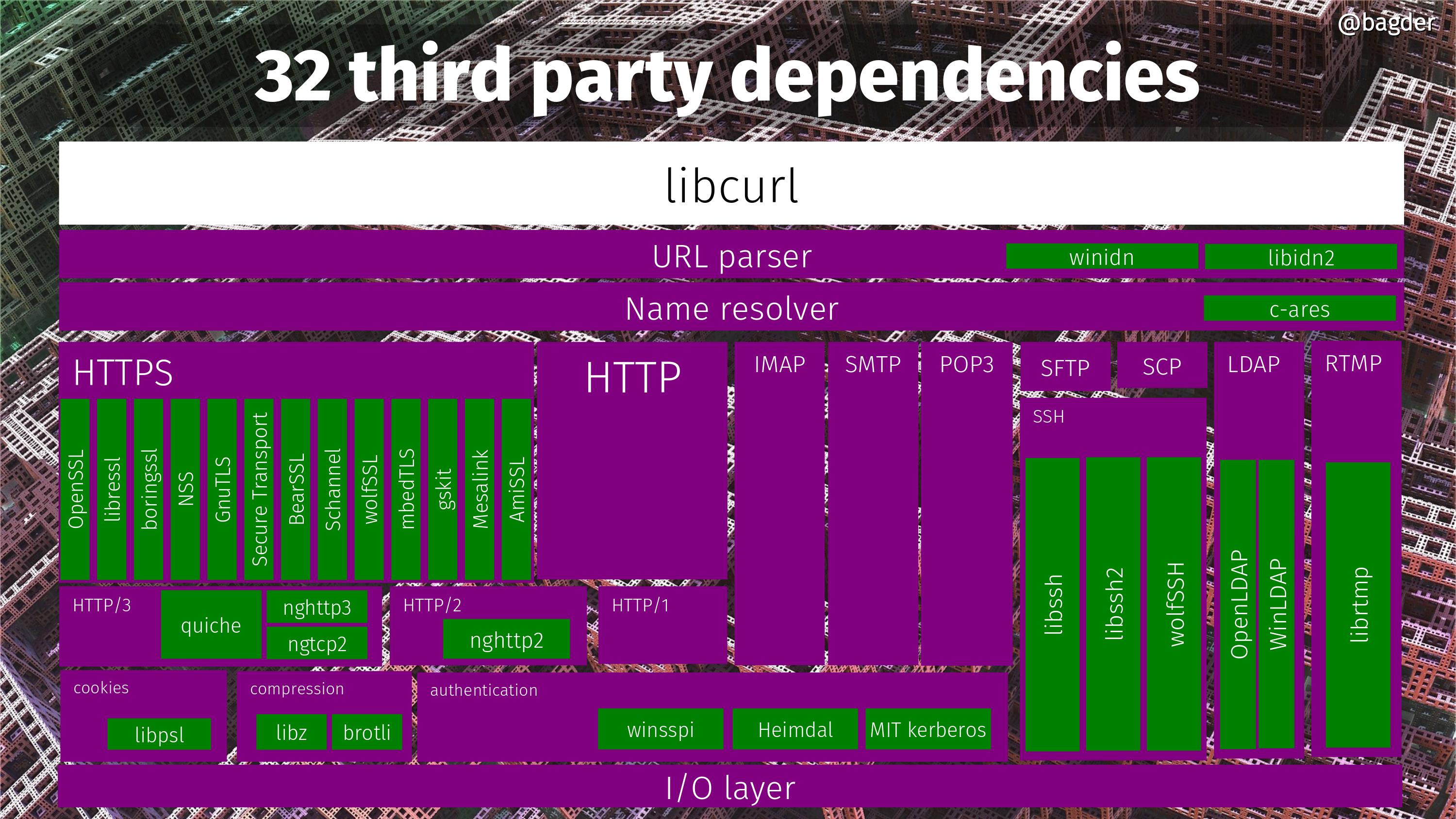

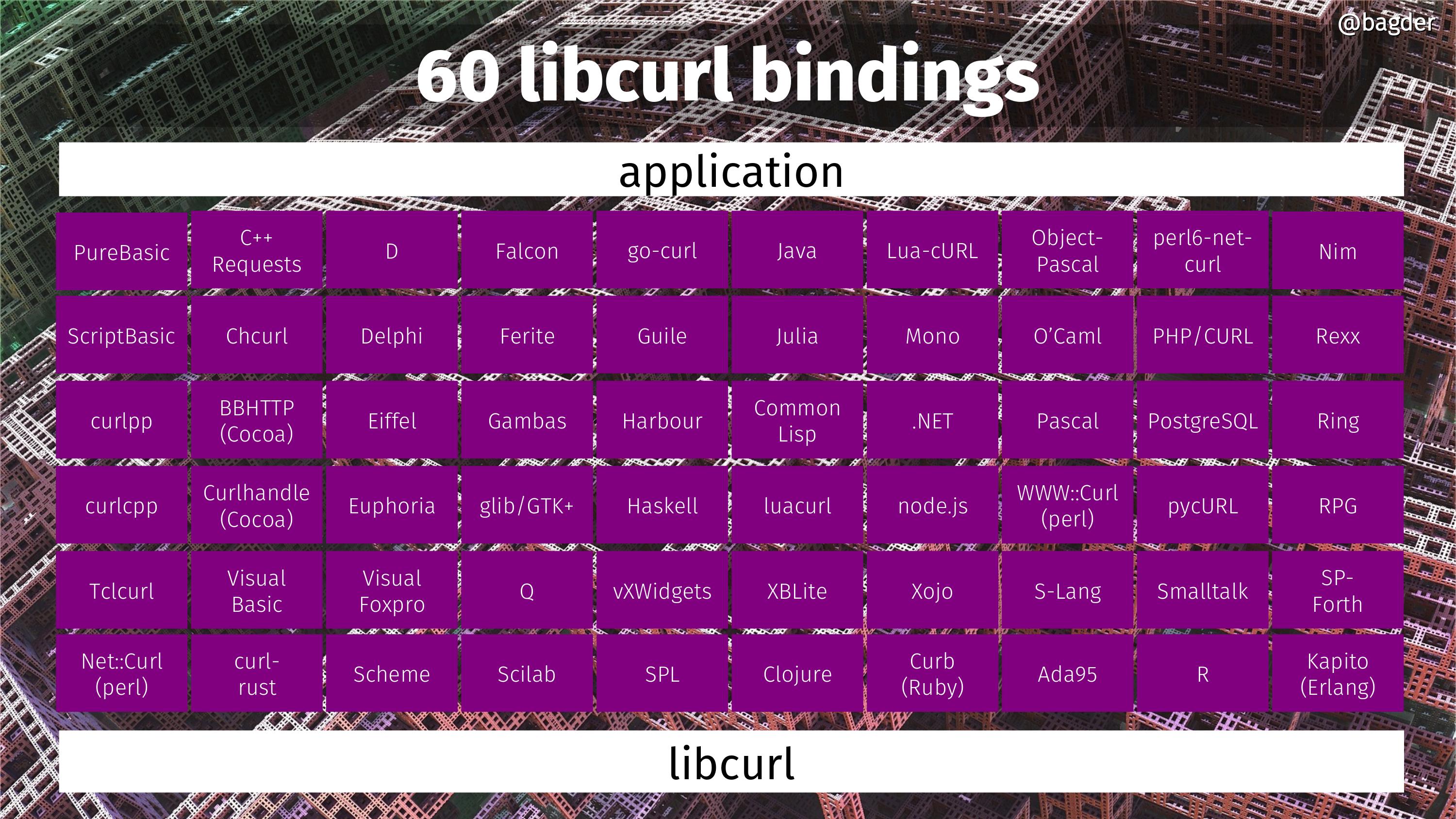

This program uses libcurl. Harry in the #curl channel found files in Virus Total and had a look. It is, I think, therefore highly likely that this “storm” is caused by an application using curl!

My theory: this is some sort of service that was deployed, or an upgrade shipped, that wants to get an updated CA store and they get that from our site with this request. Either they get it far too often or maybe there are just a very large amount them or similar. I cannot understand why they issue a POST though. If they would just have done a GET I would never have noticed and they would’ve fetched perfectly fine cached versions from the CDN…

Feel free to speculate further!

Logging, privacy, analytics

I don’t have any logging of the CDN traffic to the curl site. Primarily because I haven’t had to, but also because I appreciate the privacy gain for our users and finally because handling logs at this volume pretty much requires a separate service and they all seem to be fairly pricey – for something I really don’t want. So therefore I don’t see the source IP addresses these things. (But yes, I can ask Fastly to check and tell me if I really really wanted to know.)

Also: I don’t run any analytics (Google or otherwise) on the site, primarily for privacy reasons. So that won’t give me that data or other clues either.

Update: it has been proposed I could see the IP address in the X-Forwarded-For: headers and it seems accurate. Of course I didn’t log that header during this period but I will consider starting doing it for better control and info in the future.

Update 2: As of May 18 2020, this flood has not diminished. Logs show that we still block about 5 million requests/day from this service, peaking at over 100 requests/minute.

Credits

Top image by Elias Sch. from Pixabay